I recently encountered a use case that required managing multiple long-running backend tasks while also reporting granular updates on the state and progress of each task. The feature was to be integrated into an existing Django codebase, and our team wanted a UI with multiple progress bars. I couldn’t find a tutorial covering quite what I was looking for, so my purpose here is to create the code demo and tutorial that I would have wanted when beginning this journey.

One popular tool for Python developers is Celery, a ‘task queue’ which facilitates the queuing and concurrent execution of multiple tasks (i.e. any manner of Python function you may need to run). Celery-Progress is a great Python package for (you guessed it) easily provisioning progress bars to monitor Celery tasks.

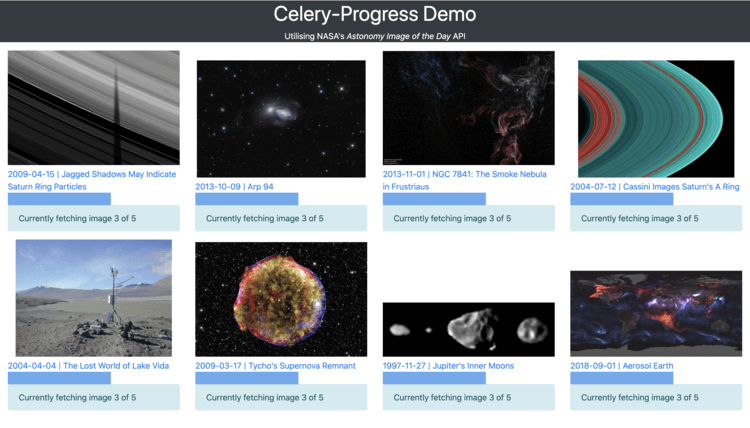

So, what will this tutorial cover? At a high level, the creation of a Django app which concurrently runs eight backend processes and provides state updates via progress bars and related metadata. In terms of showcasing Celery, I opted to go with something visually stimulating and poll the NASA Astronomy Picture of the Day API forty times. Querying an API once is of course a short task on its own, but the logic outlined in this demo could be applied to any long-running task. If you’d rather jump straight into the finished codebase, you can clone a working example directly from GitHub. The accompanying screenshot shows the project we will be building, and you can check out a screen-recording of the app in progress here.

For those wishing to jump to a specific topic, the topics are structured in the following order:

- Web Application Architecture

- Initial Django Configuration

- Configuring Celery and Celery-Progress

- Frontend Logic and Templates

- Running the final app and general parting thoughts

Web Application Architecture

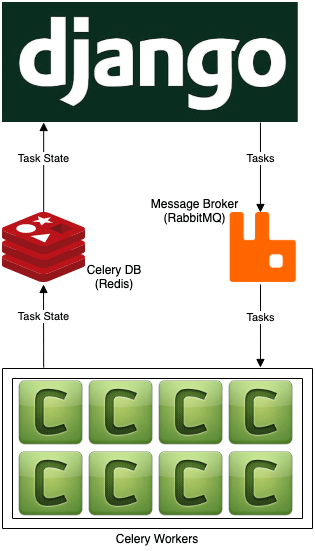

This web app is built with the Django framework. It uses a NASA API to retrieve random images and image metadata. The process of concurrently and repeatedly polling this API is managed by a task queue (Celery), which queues tasks via a message broker (RabbitMQ) and writes the state of each task to a database cache (Redis). The visualization of the tasks is managed by a Python package named celery-progress. This package, though written in Python, uses JavaScript on the frontend to poll, through a Django endpoint, our Redis cache for the current state of our Celery tasks. The accompanying diagram gives a high-level overview of the architecture we’ll be implementing.

Initial Django Configuration

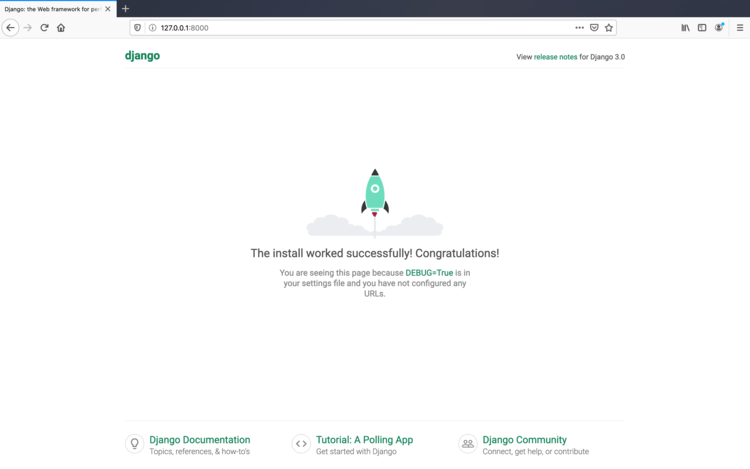

The following commands will create a Python virtual environment with all the necessary packages, before creating a new Django project and app, and starting a webserver to host the project. Once you have run the above commands, navigate to http://127.0.0.1:8000/ in your web browser and you should see the following:

Configuring Celery and Celery-Progress

Having provisioned a new Django project, we can now begin adding our desired functionality. First off, let’s configure Celery and celery-progress. Create a new file called celery.py within our Django project (i.e. celery_demo/demo_project/demo_project/celery.py).
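Here is a minimal sketch of what this file might contain, following the pattern in Celery's First Steps With Django guide. The broker and backend URLs are assumptions: they presume RabbitMQ and Redis are running locally on their default ports with default credentials, so adjust them to your environment.

```python
import os

from celery import Celery

# Make sure Django's settings are importable before the Celery app is created.
os.environ.setdefault("DJANGO_SETTINGS_MODULE", "demo_project.settings")

app = Celery(
    "demo_project",
    # Assumed URL: RabbitMQ as the message broker (default guest account).
    broker="amqp://guest:guest@localhost:5672//",
    # Assumed URL: Redis as the results backend, so task state can be re-read.
    backend="redis://localhost:6379/0",
)

# Pick up any CELERY_-prefixed options defined in Django's settings.py.
app.config_from_object("django.conf:settings", namespace="CELERY")

# Find tasks.py modules in every installed Django app.
app.autodiscover_tasks()
```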

In order to track the state of our tasks, it’s necessary to configure Celery’s result backend. If we were setting this value via a config file, we would use result_backend, but since we’re setting it directly when initializing our Celery app, we can just set the value via a parameter named backend. If you have already had some exposure to Celery, one design choice that may stand out to you is the use of RabbitMQ for Celery’s message broker but not for the results backend.

I’ll approach this topic with both a short answer and a longer answer for those wishing for a lower-level explanation.

Short Answer: Due to how the celery-progress package works, asynchronous tasks will fail with RabbitMQ but will work with Redis.

Long Answer: Celery has both an Advanced Message Queuing Protocol (AMQP) backend and a Remote Procedure Call (RPC) backend. This blog post provides a good explanation of the design and of the limitations of each backend with regard to Celery. Celery’s AMQP backend is now deprecated, though, and its documentation advises the RPC backend for those wishing to use RabbitMQ for their results backend. The issue with the RPC backend is that, in the words of Celery’s documentation, “it doesn’t actually store the states, but rather sends them as messages. This is an important difference as it means that a result can only be retrieved once, and only by the client that initiated the task.” As a consequence, celery-progress fails to pull all task states once the user adds multiple concurrent tasks.
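To make the "retrieved once" limitation concrete, here is a toy model in plain Python. It is not Celery's implementation; both classes are hypothetical stand-ins that only mimic the difference between a backend that sends states as messages and one that stores them.

```python
from collections import deque


class MessageStyleResults:
    """Toy stand-in for a message-based backend: reading a state consumes it."""

    def __init__(self):
        self._messages = deque()

    def publish(self, state):
        self._messages.append(state)

    def read(self):
        # Once popped, the message is gone for every later reader.
        return self._messages.popleft() if self._messages else None


class StoredResults:
    """Toy stand-in for a key-value backend: the latest state stays readable."""

    def __init__(self):
        self._state = None

    def publish(self, state):
        self._state = state

    def read(self):
        return self._state


def poll_twice(backend):
    """Publish one progress update, then read it from two separate polls."""
    backend.publish({"current": 3, "total": 5})
    return backend.read(), backend.read()


print(poll_twice(MessageStyleResults()))  # the second poll finds nothing
print(poll_twice(StoredResults()))        # both polls see the same state
```

A progress bar that polls repeatedly needs the second behaviour, which is why the results backend in this project is Redis.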

An alternative for our Celery backend that will support concurrent tasks is Redis. Pairing RabbitMQ as the message broker with Redis as the results backend is framed as a ‘popular choice’ in Celery’s documentation, as RabbitMQ is less prone to data loss. In terms of choosing a message broker, Celery’s documentation warns “Redis is also feature-complete, but is more susceptible to data loss in the event of abrupt termination or power failures.” Depending on your use case, Redis or RabbitMQ alone could be a good fit. We’re going to use both for this scenario. The simplest way to provision Redis and RabbitMQ is via Docker. You can pull a Redis image and a RabbitMQ image from Docker Hub and start a container for each by running these two commands in your terminal:

docker run -d -p 5672:5672 rabbitmq

docker run -d -p 6379:6379 redis

Now that we’ve created our celery.py file, it’s necessary to update our project’s init file (demo_project/__init__.py) so that our Celery app is imported when Django starts up. For those seeking deeper insight, this Celery/Django configuration is based on the Celery tutorial First Steps With Django.

The final piece of the Celery setup is to create the function for our logic. Let’s create a file called tasks.py inside our Django app (i.e. celery_demo/demo_project/demo_app/tasks.py).

Our task could have done any manner of work, but it seemed more fun to do something more interesting than, say, counting to 100. Our task will retrieve random images from NASA’s Astronomy Picture of the Day API. See api.nasa.gov for further info and to generate your own API key. As the API sometimes links to YouTube videos and interactive web pages, it makes sense from a visual perspective for us to only link image files. The task itself is relatively straightforward. Iterate through a numerical range and for each iteration do the following:

- Generate a random date within a valid date range

- Retrieve an image for this date

- Check whether the result is a valid image file and, if not, repeat the two steps above until a valid image file is retrieved

- Update Celery’s progress with an image URL and metadata
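The steps above can be sketched in plain Python as follows. This is not the original tasks.py: the function names are hypothetical, the HTTP call and the progress update are passed in as callables so the loop can run offline, and two details are assumptions about the API, namely that the archive begins on 16 June 1995 and that each response carries a `media_type` field equal to `"image"` for image entries.

```python
import random
from datetime import date, timedelta

# Assumption: the first Astronomy Picture of the Day is dated 16 June 1995.
FIRST_APOD_DATE = date(1995, 6, 16)


def random_apod_date(rng, latest):
    """Pick a random date between the first entry and `latest`, inclusive."""
    span_days = (latest - FIRST_APOD_DATE).days
    return FIRST_APOD_DATE + timedelta(days=rng.randint(0, span_days))


def is_image(entry):
    """Assumption: videos and web pages have a media_type other than 'image'."""
    return entry.get("media_type") == "image"


def collect_images(count, fetch, on_progress, rng=None, latest=None):
    """Fetch `count` valid images, reporting progress after each one.

    `fetch(day)` returns the API's JSON for that date as a dict, and
    `on_progress(current, total, metadata)` receives each accepted entry.
    Both are hypothetical callables supplied by the caller.
    """
    rng = rng or random.Random()
    latest = latest or date.today()
    images = []
    for i in range(count):
        entry = fetch(random_apod_date(rng, latest))
        while not is_image(entry):  # try another date until we get an image
            entry = fetch(random_apod_date(rng, latest))
        images.append(entry)
        metadata = {"url": entry["url"], "title": entry.get("title", "")}
        on_progress(i + 1, count, metadata)
    return images


# Demo with a stand-in fetcher so the sketch runs without network access.
_canned = iter([
    {"media_type": "video", "url": "https://example.com/clip"},
    {"media_type": "image", "url": "https://example.com/a.jpg", "title": "A"},
    {"media_type": "image", "url": "https://example.com/b.jpg", "title": "B"},
])
updates = []
images = collect_images(
    2,
    fetch=lambda day: next(_canned),
    on_progress=lambda cur, total, meta: updates.append((cur, total, meta["title"])),
    rng=random.Random(0),
    latest=date(2020, 1, 1),
)
print(updates)
```

In the real task, `fetch` would wrap an HTTP request to the API, and `on_progress` would wrap the `set_progress` method of celery-progress's `ProgressRecorder`, with the metadata serialized to JSON. Note that the retry loop has no upper bound, so a production version would likely want a maximum number of attempts.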

One cool feature of note is that metadata is being passed back in JSON format.

With Celery configured and our Celery task written, we can now build out the Django frontend. The first step is integrating celery-progress, a Python package that manages the polling of Celery’s results backend and visualizes it with progress bars. Its JavaScript polls for task state every 500 milliseconds, updating the progress bars if necessary.

First off, let’s update our project’s urls file (demo_project/demo_project/urls.py) with the following:
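A sketch of what the updated file might look like. The `celery-progress/` route follows the celery-progress README; routing the site root to `demo_app.urls` is an assumption based on the app-level urls.py created later in this tutorial.

```python
from django.contrib import admin
from django.urls import include, path

urlpatterns = [
    path("admin/", admin.site.urls),
    # Endpoint that celery-progress's JavaScript polls for task state.
    path("celery-progress/", include("celery_progress.urls")),
    # Assumption: the app's own routes live in demo_app/urls.py.
    path("", include("demo_app.urls")),
]
```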

In our project’s settings file (celery_demo/demo_project/demo_project/settings.py), we need to add demo_app, celery, and celery_progress to the INSTALLED_APPS variable.
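As a sketch, the resulting list might look like this, assuming the first six entries are the defaults that `django-admin startproject` generates:

```python
INSTALLED_APPS = [
    # Defaults generated by `django-admin startproject`.
    "django.contrib.admin",
    "django.contrib.auth",
    "django.contrib.contenttypes",
    "django.contrib.sessions",
    "django.contrib.messages",
    "django.contrib.staticfiles",
    # Additions for this demo.
    "demo_app",
    "celery",
    "celery_progress",
]
```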

We also need to update our app’s views file (i.e. celery_demo/demo_project/demo_app/views.py):

Here we are adding a new view which will call our Celery task eight times. Each individual Celery task will then poll NASA’s API five times. In order for us to track the progress of each task on the frontend, it is necessary to return the id of each Celery task. I have chosen to go with a dictionary as it allows us to iterate easily through multiple concurrent task ids. The logic iterates from 0 to 7, creating a dictionary which maps each number in the range to the id of its corresponding Celery task.
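The dictionary-building step might be sketched like this. `start_tasks` and `fake_launch` are hypothetical names; the launcher is a stand-in so the example runs without a broker, and it returns an object with an `id` attribute, as the result of calling a Celery task's `delay()` does.

```python
from itertools import count
from types import SimpleNamespace


def start_tasks(launch, task_count=8):
    """Map each index in range(task_count) to the id of a newly queued task.

    `launch` is any zero-argument callable that queues one task and returns
    an object exposing the task's id as `.id`.
    """
    return {index: launch().id for index in range(task_count)}


# Stand-in launcher so the sketch runs without Celery or a broker.
_fake_ids = count()


def fake_launch():
    return SimpleNamespace(id=f"task-{next(_fake_ids)}")


celery_task_ids = start_tasks(fake_launch)
print(celery_task_ids[0], celery_task_ids[7])
```

In the actual view, the launcher could be something like `lambda: fetch_images.delay(5)`, where `fetch_images` is a hypothetical name for the Celery task, and the resulting dictionary would be passed to the template as `celery_task_ids`.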

Frontend Logic and Templates

Within our Django app, create a file named urls.py (i.e. celery_demo/demo_project/demo_app/urls.py). The purpose of this is to map our view, and with it the template file, to a URL path. In our case it’s our web app’s root directory (i.e. what is displayed at http://127.0.0.1:8000/).

The next logical step is to create our template file. Within the Django app’s templates folder, create another folder named demo_app and a file named index.html within it (i.e. celery_demo/demo_project/demo_app/templates/demo_app/index.html). The level of nesting in this file path may seem excessive, but the namespacing is an important discipline whose value would become apparent if this Django project had another app containing a template named index.html. As the Django documentation explains, Django will choose the first template it finds whose name matches, and if you had a template with the same name in a different application, Django would be unable to distinguish between them.

Our template will be somewhat involved, so now is a good time to check and ensure that everything is working as expected. Let’s write the text ‘Hello World’ to this template file and confirm that everything is working ok by checking our webapp in the browser. Run python3 manage.py runserver in your terminal and you should see something like this in your browser at http://127.0.0.1:8000/.

Now let’s fill out our index.html template properly.

There are three main parts to this template: CSS for styling everything, Python logic written in Django’s template language, and JavaScript logic for integrating celery-progress and dynamically updating relevant HTML elements.

- In the head of the file the dependencies for the Bootstrap CSS framework are imported. A custom CSS class selector is necessary in addition to Bootstrap’s default stylesheets. The purpose of this class selector is to ensure that all eight images are uniform in height and width, regardless of the dimensions of the source images.

- The code for generating the visual content that the user will see is all nested within one Bootstrap container. Our view file has already passed a Python dictionary named celery_task_ids to this template. The code iterates through this dictionary and dynamically generates the raw HTML that displays the images.

- Most of the JavaScript that the celery-progress package requires is already configured by default. We require two additions. The first is to add event listeners so that celery-progress begins polling Redis for task-state updates as soon as the DOM loads. It is also necessary to override the default onProgress function which celery-progress calls each time a task’s state updates. It is necessary to override this as the HTML used to display our images differs from the celery-progress default; we are updating each element with an image and each image’s metadata.

Running the final app and general parting thoughts

With our template complete, we are now ready to view the finished product. First let’s start up Celery:

python3 -m celery -A demo_project worker -l info --concurrency=8

Where this command is run from is important. It should be run from within the project root folder (i.e. celery_demo/). Note that a concurrency flag is included and set to eight. Celery defaults this value to the host machine’s number of cores, so if I were to run this on a four-core machine without the concurrency flag set, it would only run four tasks at a time.
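As a rough back-of-the-envelope illustration of the effect of this flag, the following sketch estimates how many rounds are needed to get through a batch of tasks. `waves_needed` is a hypothetical helper, and it assumes each worker process handles one task at a time and that all tasks take about the same time.

```python
import math
import os


def waves_needed(task_count, concurrency=None):
    """Rounds needed if each worker process runs one task at a time."""
    # Assumption: with no explicit value, fall back to the CPU count,
    # mirroring the default described above.
    workers = concurrency or os.cpu_count() or 1
    return math.ceil(task_count / workers)


print(waves_needed(8, concurrency=8))  # 1: all eight tasks run together
print(waves_needed(8, concurrency=4))  # 2: the second four wait for the first
```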

Finally, if it isn’t already running, start the Django webserver:

python3 manage.py runserver

Navigate to http://127.0.0.1:8000/ in your web browser and enjoy 40 unique images from NASA’s Astronomy Picture of the Day. For reference, here’s a screen-recording of the web app in action, and if your web app failed to work as expected, you can of course clone a working version from GitHub.