Machine learning (ML) is now widely adopted across many industries. Whether it’s telecom providers using it to predict network maintenance, drug companies using it to help identify diseases, or simply retailers using it to optimize pricing, it’s undeniably everywhere.

In this blog, we will explore the five fundamentals, or pillars, that uphold Machine Learning best practice. Just like Rome, these five pillars were not built in a day, so let’s start with a quick look back at how ML has evolved over the decades. For those who work in ML, it is a fascinating field where advances are made daily. Over the last 25 years, we have seen algorithms go from beating the world chess champion (Deep Blue in 1997) to winning at Go (AlphaGo in 2016). ML has also become widespread in healthcare, with Microsoft’s Project InnerEye helping medical decision-making and AlphaFold helping to understand protein structure. Today, my iPhone even unlocks by recognizing my face.

When building ML models, some data scientists try to run before they can walk and, as a result, get limited success. The five pillars below are the fundamentals to get right first.

1. Don’t be a lone wolf

Make your code run easily like your wolfpack

Make your work easily repeatable, without changing a line of code. The world of machine learning, and programming in general, is highly collaborative. Many practitioners actively promote open-source code and freely publish their methods and results, which is great for those looking to expand their skills. Even if your code lives in an internal repo or is just a collection of notebooks, you are always going to be collaborating with peers, teammates, or other stakeholders. If you are sharing a notebook, script, function, or whatever it may be, it should run without errors!

Often, when trying to adapt a colleague’s code or a brand-new ML model from a research paper, GitHub, or wherever it comes from, you clone the code into your environment, click run, and… it doesn’t! You then spend minutes, hours, or even days trying to track down why, oh why, this code doesn’t work. After following up with your co-worker, correcting a couple of lines, rewriting the config, and installing new or old versions of libraries that weren’t mentioned in the requirements file, you may perhaps have a model that does what it is supposed to! This is frustrating, wastes time, and makes people not want to be part of your wolfpack.

Many tools can run automated tests on your code for you (see continuous integration/continuous deployment, or CI/CD), which helps catch the case where the model only works on your machine. You can also build your model into a Docker image that you share. The bottom line, though, is to make sure your code plays ball for all who may want to use it.
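One small way to catch the "libraries that weren’t mentioned" problem early is to have the code check its own environment before doing anything else. The sketch below is only an illustration: the function name `check_pins` is hypothetical, and the pinned versions you pass in would be whatever your project was actually developed with. It relies only on the standard library’s `importlib.metadata`.

```python
from importlib.metadata import PackageNotFoundError, version


def check_pins(pins):
    """Compare installed package versions against pinned ones.

    `pins` maps a distribution name to the exact version the code was
    developed with. Returns a list of human-readable problems; an empty
    list means every pinned package is installed at the expected version.
    """
    problems = []
    for name, wanted in pins.items():
        try:
            installed = version(name)
        except PackageNotFoundError:
            # The package is missing entirely from this environment.
            problems.append(f"{name} is not installed (need {wanted})")
            continue
        if installed != wanted:
            # Installed, but not at the version the author used.
            problems.append(f"{name}=={installed} found, need {wanted}")
    return problems
```

Calling `check_pins` at the top of a script or notebook, and printing whatever it returns, gives a collaborator a clear message instead of a mysterious stack trace. It complements CI and Docker rather than replacing them.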

2. Mark Your Territory

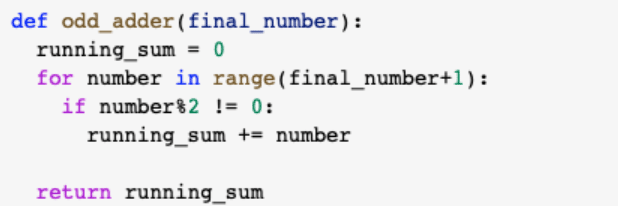

Comment your code for yourself and others

This point builds on the last: you must comment your code. If you want to share your code with colleagues, clients, or the wider world, and you want these people to build on your work, they need to understand it (and so do you when you come back to it)! Imagine you have to implement a function. If you have decided to play code golf, use one-liners, or just generally like writing tight code (i.e., few lines and few words), you might end up with something very terse.

On the other hand, if you like to keep things verbose, it might look like this:

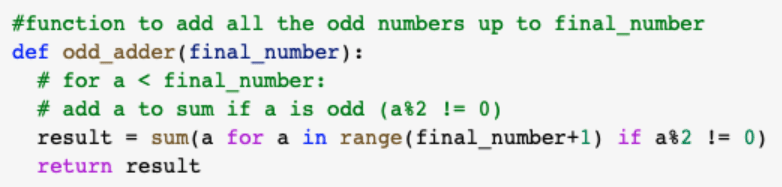


While there are pros and cons to both approaches, they both suffer from a lack of interpretability. If you pulled off a sneaky trick to optimize a routine, had to bash through a complicated API to implement a data loader, or developed a brilliant new neural network architecture, your co-workers might not understand what you did or why you did it. Potentially even worse, you might not understand it yourself when you come back to it in the future. A good practice is to put a comment between each section of your code to explain what it does and why. Code commented this way is more in line with best practice.
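As a minimal sketch of what section-by-section commenting could look like, here is a hypothetical `standardize` function (not taken from the original post). Each comment says what the next few lines do and, where it matters, why.

```python
import math


def standardize(values):
    """Rescale a list of numbers to zero mean and unit variance."""
    # Fail early with a clear message, rather than with a
    # ZeroDivisionError a few lines further down.
    if not values:
        raise ValueError("values must not be empty")

    # Centre the data: subtracting the mean gives the result a mean of 0.
    mean = sum(values) / len(values)
    centred = [v - mean for v in values]

    # Population standard deviation (divide by n, not n - 1), because we
    # are describing this dataset rather than estimating from a sample.
    std = math.sqrt(sum(c * c for c in centred) / len(values))

    # Constant input has std == 0; return zeros instead of dividing by 0.
    if std == 0:
        return [0.0 for _ in values]

    # Scale so the result has unit variance.
    return [c / std for c in centred]
```

Note that the comments explain decisions (why the population formula, why the early guard) and not just syntax; that is the part a future reader cannot recover from the code alone.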

When you share your code with collaborators, make sure you have commented it or, in other words, marked your territory.

3. Share Your Kill

Share data and keep yours tidy

Whew, okay, so you have some reproducible code that is easy to understand. That’s a pretty good start for any project. Your co-workers know what you have done and why, and they can make it happen on their machine with minimal messing around (ideally with a couple of commands like git clone my_great_repo followed by python my_great_repo/main.py).

Well, this is good… but for ML projects, the main ingredients are code and data. If your code involves training a new model, measuring how well it works, or whatever the case may be, it needs datasets to do these things. While retraining your model might sometimes be overkill, someone who wants to compare it side by side with their own model will benefit from having your data. For many projects the main training data is proprietary and cannot always be shared, so practitioners should share an analogous public dataset. Otherwise, you are simply telling people to trust you that it works.

Another way to ensure that your results are robust and reproducible is to implement an appropriate train/validation/test split on your data. It is fundamental that these three datasets are independent. Without going down a rabbit hole of how your training data should sample your whole dataset, and allowing for exceptions, the generic case is that you train your model with one dataset, optimize the hyperparameters with another, and test it with a third. There should be no overlap between these datasets. Otherwise, you are cheating, and your model will not work in production as you expect!

When you share your code, share the public data for it and the code that makes the independent training, validation, and test datasets.
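The generic case described above can be sketched in a few lines of standard-library Python. The function name `split_indices` and its default fractions are assumptions made for illustration; the sketch also assumes that rows are independent, so a plain random shuffle is appropriate. Grouped or time-ordered data are among the exceptions and need a different strategy.

```python
import random


def split_indices(n_rows, val_fraction=0.15, test_fraction=0.15, seed=0):
    """Shuffle row indices once and cut them into three disjoint sets."""
    if not 0 <= val_fraction + test_fraction < 1:
        raise ValueError("val_fraction + test_fraction must be in [0, 1)")

    indices = list(range(n_rows))
    # A seeded generator makes the split repeatable from run to run.
    random.Random(seed).shuffle(indices)

    n_test = int(n_rows * test_fraction)
    n_val = int(n_rows * val_fraction)

    # Slicing one shuffled list guarantees that no index appears twice.
    test = indices[:n_test]
    val = indices[n_test:n_test + n_val]
    train = indices[n_test + n_val:]
    return train, val, test
```

Sharing a function like this alongside the public dataset lets collaborators rebuild exactly the same three sets instead of guessing how they were made.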

4. Make it easy to add wolves to the pack

Modular code is better

When you build your model, data loader, trainer, or whatever the case may be, you want to stick with good object-oriented principles. You should be able to chop and change things easily without requiring changes to the base code (e.g., change a loss function without going into your model code to do so, simply model.loss = my_custom_loss, or something to that effect). This will make your life easier for experiments and development, make your collaborators’ lives less stressful, and speed up iterations, which helps produce better models that make more sense (and are also more interpretable).
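To make the model.loss = my_custom_loss idea concrete, here is a deliberately tiny sketch. `ConstantModel` and both loss functions are hypothetical, written only for this illustration; the point is that the loss is a plain attribute, so it can be swapped from outside without touching the class.

```python
def mean_squared_error(predictions, targets):
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)


def mean_absolute_error(predictions, targets):
    return sum(abs(p - t) for p, t in zip(predictions, targets)) / len(targets)


class ConstantModel:
    """Toy model that predicts one constant value for every input.

    The loss is stored as an attribute, so it can be replaced from
    outside without editing this class.
    """

    def __init__(self, loss=mean_squared_error):
        self.loss = loss
        self.value = 0.0

    def fit(self, targets, candidates):
        # Pick the candidate constant that minimises whichever loss
        # is currently plugged in.
        self.value = min(
            candidates,
            key=lambda c: self.loss([c] * len(targets), targets),
        )
        return self


targets = [1.0, 2.0, 3.0, 10.0]
candidates = [x / 2 for x in range(21)]  # 0.0, 0.5, ..., 10.0

model = ConstantModel().fit(targets, candidates)
mse_value = model.value  # squared error pulls the constant towards the mean

model.loss = mean_absolute_error  # swap the loss; no change to ConstantModel
mae_value = model.fit(targets, candidates).value  # absolute error favours a median
```

With the outlier at 10.0, the two losses settle on different constants, which is exactly the kind of experiment that becomes a one-line change when the code is modular.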

5. Maintain a groomed coat

Your code should make something pretty

This might seem basic, but it ties into making it easy for others to understand what you’ve done. It might be for your weekly meeting with your supervisor, a presentation to stakeholders, or even a pitch to investors, but if you can build into your modular, reproducible, well-commented code a function that produces some nice example outputs, your work will come across more effectively. Receiver operating characteristic (ROC) curves are great, and loss-per-epoch graphs might be interesting, but if you don’t show me what your model is supposed to do, I might just switch off and stop paying attention. Example outputs also help you check that you know what the model is doing, and they aid your collaborators too.
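A function that produces example outputs does not have to be elaborate. As a rough sketch, the hypothetical `format_examples` helper below lines up a few inputs with what the model predicted and what the right answer was; a real project would more likely render images or plots, but the idea is the same.

```python
def format_examples(inputs, predictions, targets, limit=5):
    """Return a small text table comparing predictions with targets."""
    header = f"{'input':<20} {'predicted':<12} {'actual':<12} ok"
    lines = [header, "-" * len(header)]
    # Show at most `limit` rows so the table stays readable on a slide.
    for x, pred, target in list(zip(inputs, predictions, targets))[:limit]:
        mark = "yes" if pred == target else "NO"
        lines.append(f"{str(x):<20} {str(pred):<12} {str(target):<12} {mark}")
    return "\n".join(lines)
```

Printing `format_examples(...)` at the end of an evaluation run gives your audience something concrete to look at next to the metrics.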

These steps are the fundamentals of good ML practice, and of good coding practice in general. I understand that best practices can sometimes seem monotonous, obvious, or even time-consuming, but if you want your team to collaborate and work as a unit, like a pack of arctic wolves, you should follow this advice.
