Diabetes is a chronic disease that occurs when a patient’s blood glucose is higher than normal. Elevated glucose can be caused by defective insulin secretion, impaired insulin action, or both. Projections suggest that by 2040 more than 600 million people will have diabetes, meaning that one in ten adults will suffer its ill effects. This alarming figure clearly demands attention. Given the rapid development of machine learning, we will see how modern techniques can be applied to diagnosing diabetes.

I will use a real-world dataset of Pima Indian patients provided by Kaggle. Let’s see how we can apply a classification algorithm to identify whether a person is diabetic.

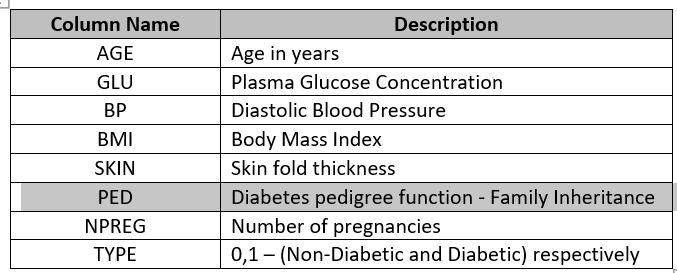

The dataset consists of 8 features measured for 332 patients. Below is a description of each feature:

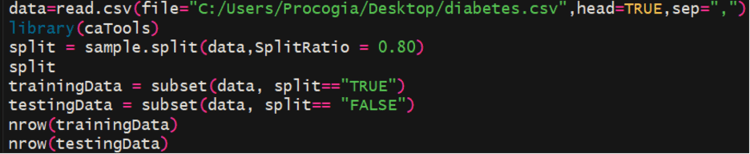

I start by splitting the data into two distinct subsets: one to train my models and the other to test their accuracy. I choose a training-to-testing ratio of 80:20, a common choice when training machine learning models. Here is some R code for reading in my data and creating the training and testing sets:

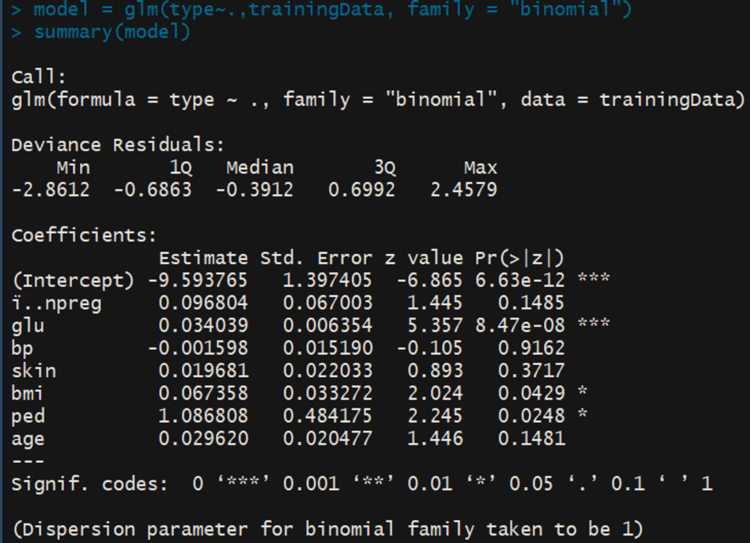
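For readers who want a text version of this step, here is a minimal sketch. It assumes that the `Pima.te` data frame from R's MASS package (332 patients, with columns `npreg`, `glu`, `bp`, `skin`, `bmi`, `ped`, `age` and the outcome `type`) stands in for the article's data, and the seed is an arbitrary value chosen for reproducibility, not the author's.

```r
# Sketch only: assumes MASS::Pima.te stands in for the article's data
library(MASS)

pima <- Pima.te  # 332 rows; 'type' is a factor with levels "No" and "Yes"

set.seed(42)  # arbitrary seed, chosen here only for reproducibility
n_train <- floor(0.8 * nrow(pima))  # 80% of the rows go to training
train_idx <- sample(seq_len(nrow(pima)), n_train)

train <- pima[train_idx, ]   # 80% training set
test  <- pima[-train_idx, ]  # remaining 20% testing set

cat("training rows:", nrow(train), "| testing rows:", nrow(test), "\n")
```

With 332 rows, this gives 265 training rows and 67 testing rows.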

After the data is split, let’s train a logistic regression classifier using the training data.

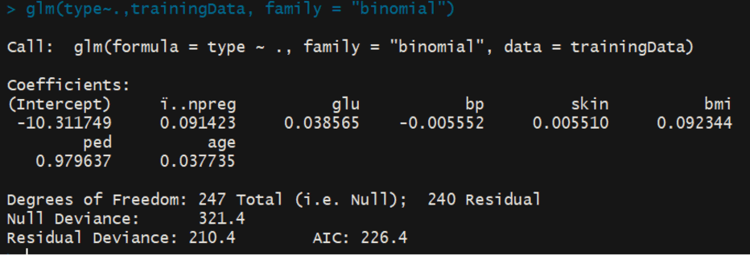
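In R, a logistic regression classifier is usually fit with `glm()` and the binomial family. The sketch below assumes the MASS `Pima.te` data and an arbitrary 80:20 split so that it runs on its own. `type ~ .` uses every other column as a predictor, and because `type` is a factor, `glm()` models the probability of its second level, `"Yes"` (diabetic).

```r
library(MASS)

# Assumed data and 80:20 split (MASS::Pima.te, arbitrary seed)
pima <- Pima.te
set.seed(42)
train_idx <- sample(seq_len(nrow(pima)), floor(0.8 * nrow(pima)))
train <- pima[train_idx, ]

# Logistic regression: binomial family with its default logit link.
# 'type ~ .' regresses the outcome on all seven remaining columns.
model <- glm(type ~ ., data = train, family = binomial)

print(coef(model))  # intercept plus one coefficient per feature
```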

With the model trained, let’s look at its summary, which R produces with the `summary()` function.

The summary contains a lot of information that helps explain the inner workings of a logistic regression model.

Let’s break it down:

The coefficients table lists every feature together with its estimated coefficient, standard error, z-value and p-value. The stars (*) at the far right of each row mark that feature’s statistical significance: the smaller the p-value, the more stars. For example, the p-value for glu is very close to 0. Naively, we can say that glu, ped and bmi are the three most important features, in that order.
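The coefficient table can also be extracted from the summary and sorted by p-value, which reproduces this naive ranking programmatically. This is a sketch on the assumed MASS `Pima.te` split. A small p-value only says that a coefficient is reliably non-zero, not that its effect is large.

```r
library(MASS)

# Assumed data and 80:20 split (MASS::Pima.te, arbitrary seed)
pima <- Pima.te
set.seed(42)
train_idx <- sample(seq_len(nrow(pima)), floor(0.8 * nrow(pima)))
train <- pima[train_idx, ]
model <- glm(type ~ ., data = train, family = binomial)

print(summary(model))  # full summary, including the significance stars

# Columns: Estimate, Std. Error, z value, Pr(>|z|)
coefs <- coef(summary(model))
features <- coefs[rownames(coefs) != "(Intercept)", ]

# Rank features from most to least statistically significant
ranked <- features[order(features[, "Pr(>|z|)"]), c("Estimate", "Pr(>|z|)")]
print(signif(ranked, 3))
```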

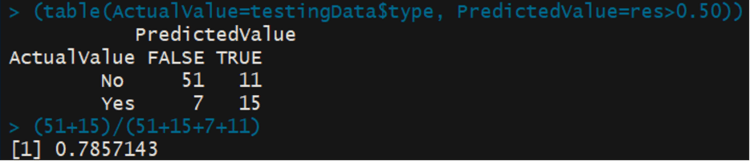

Let’s check the accuracy of this model by constructing a confusion matrix on the testing data. We can see that 51 cases were correctly classified as non-diabetic, while 15 cases were correctly classified as diabetic. This gives an accuracy of 79%, which isn’t bad for a first attempt. It is concerning, however, how many non-diabetic patients this model would diagnose with diabetes: a false positive rate like this would be unacceptable in practice.
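Here is a sketch of how such a confusion matrix can be built, using the assumed MASS `Pima.te` split. The 0.5 probability cut-off is also an assumption, because the article does not state its threshold. The split also differs from the author’s, so the counts will not match 51 and 15.

```r
library(MASS)

# Assumed data and 80:20 split (MASS::Pima.te, arbitrary seed)
pima <- Pima.te
set.seed(42)
train_idx <- sample(seq_len(nrow(pima)), floor(0.8 * nrow(pima)))
train <- pima[train_idx, ]
test  <- pima[-train_idx, ]
model <- glm(type ~ ., data = train, family = binomial)

# Predicted probability of diabetes for each test patient
prob <- predict(model, newdata = test, type = "response")

# Assumed cut-off: predict "Yes" (diabetic) when the probability exceeds 0.5
pred <- factor(ifelse(prob > 0.5, "Yes", "No"), levels = c("No", "Yes"))

# Rows are predictions, columns are the true labels
conf_mat <- table(Predicted = pred, Actual = test$type)
print(conf_mat)

accuracy <- sum(diag(conf_mat)) / sum(conf_mat)
# False positive rate: share of truly non-diabetic patients predicted diabetic
fpr <- conf_mat["Yes", "No"] / sum(conf_mat[, "No"])
cat(sprintf("accuracy = %.3f, false positive rate = %.3f\n", accuracy, fpr))
```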

Model Optimization:

Model optimization, the process of choosing the best parameters for a machine learning model, is an important step. At ProCogia, we commonly spend a significant amount of time and effort making sure the model parameters are appropriately tuned. Since every problem is different, each presents its own set of challenges during optimization.

Before we begin, I should define the concepts of Null deviance, Residual deviance and AIC:

Null Deviance: Null deviance shows how well the response variable is predicted by a model that includes only the intercept, that is, one that predicts the overall proportion of diabetic patients for everyone.

Residual Deviance: Residual deviance shows how well the response variable is predicted once the independent variables are included. The lower the deviance, the better the model fits the data. Because a model with fewer features is nested inside the full model, removing a feature can never lower the residual deviance. While optimizing, we therefore want it to rise as little as possible.

AIC: The Akaike information criterion (AIC) is an estimator of the relative quality of statistical models for a given set of data. It penalizes the number of estimated parameters, and lower values are better, so it should decrease as we optimize.
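For a logistic regression on 0/1 outcomes, these three quantities are linked. The notation below is introduced here: $y_i$ is patient $i$'s outcome (1 = diabetic), $\hat p_i$ is the fitted probability, $\bar y$ is the overall proportion of diabetic patients, and $k$ is the number of estimated coefficients, including the intercept.

$$
\begin{aligned}
\ell &= \sum_{i=1}^{n} \left[ y_i \log \hat p_i + (1 - y_i) \log (1 - \hat p_i) \right] \\
D_{\text{residual}} &= -2\,\ell \\
D_{\text{null}} &= -2 \sum_{i=1}^{n} \left[ y_i \log \bar y + (1 - y_i) \log (1 - \bar y) \right] \\
\text{AIC} &= -2\,\ell + 2k = D_{\text{residual}} + 2k
\end{aligned}
$$

Dropping one feature lowers $k$ by 1, so $\Delta\text{AIC} = \Delta D_{\text{residual}} - 2$. AIC therefore falls whenever removing a feature raises the residual deviance by less than 2.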

From the model summary, we concluded that three features are important: glu, bmi and ped. One or more of the other features may be hurting our model. That said, we cannot simply remove the unimportant features outright. Instead, we check how removing each one affects the residual deviance and AIC. If removing a feature increases AIC, or raises the residual deviance by more than a small amount, we should keep it in the model.

For reference, let’s also note these values for our current model.

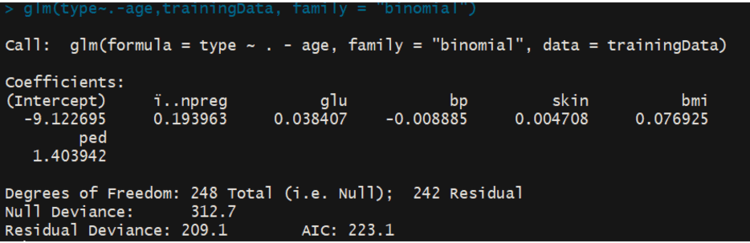
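The values to note can be read directly from the fitted object. This sketch uses the assumed MASS `Pima.te` split. The final check confirms the identity AIC = residual deviance + 2k for 0/1 logistic regression, where k counts the intercept and every feature coefficient.

```r
library(MASS)

# Assumed data and 80:20 split (MASS::Pima.te, arbitrary seed)
pima <- Pima.te
set.seed(42)
train_idx <- sample(seq_len(nrow(pima)), floor(0.8 * nrow(pima)))
train <- pima[train_idx, ]
model <- glm(type ~ ., data = train, family = binomial)

baseline <- c(
  null_deviance     = model$null.deviance,  # intercept-only model
  residual_deviance = deviance(model),      # model with all features
  aic               = AIC(model)
)
print(round(baseline, 2))

# For 0/1 outcomes, AIC equals residual deviance plus 2 per coefficient
k <- length(coef(model))
print(all.equal(unname(baseline["aic"]),
                unname(baseline["residual_deviance"]) + 2 * k))
```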

Let’s start removing the unimportant features one at a time, checking each time that AIC does not increase and that the residual deviance rises only slightly.

Removing Age:

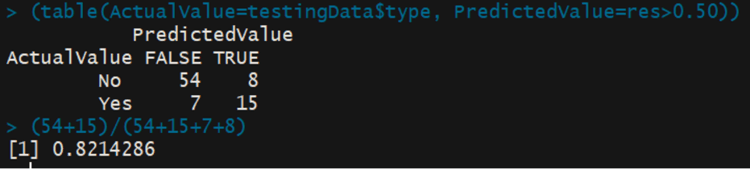
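The check for age, and the same check for the other candidates, can be scripted with `update()`, which refits a model with a modified formula. This is a sketch on the assumed MASS `Pima.te` split. The candidate list (`age`, `npreg`, `bp`, `skin`) assumes that the unimportant features are the ones other than glu, bmi and ped.

```r
library(MASS)

# Assumed data and 80:20 split (MASS::Pima.te, arbitrary seed)
pima <- Pima.te
set.seed(42)
train_idx <- sample(seq_len(nrow(pima)), floor(0.8 * nrow(pima)))
train <- pima[train_idx, ]
model <- glm(type ~ ., data = train, family = binomial)

# Assumed candidates: every feature other than glu, bmi and ped
candidates <- c("age", "npreg", "bp", "skin")

# Refit once per candidate, dropping only that feature
results <- t(sapply(candidates, function(feature) {
  reduced <- update(model, as.formula(paste(". ~ . -", feature)))
  c(residual_deviance = deviance(reduced), aic = AIC(reduced))
}))

# Change relative to the full model (positive = higher after removal)
results <- cbind(results,
                 delta_deviance = results[, "residual_deviance"] - deviance(model),
                 delta_aic      = results[, "aic"] - AIC(model))
print(round(results, 3))

# Candidates whose removal lowers AIC
print(rownames(results)[results[, "delta_aic"] < 0])
```

This loop drops each feature from the full model independently. When two features are removed together, the combined model is worth checking again.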

Performing the same process for the other three features, we find that the only other feature that could be removed is skin. Therefore, a new model was trained without the age and skin features. Let’s check whether the accuracy has increased from 78%.

It has: the new model correctly classifies three patients who previously showed up as false positives. The same approach can be used to test and tune any other parameter of the model.
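Here is a sketch that compares the full and reduced models on the same test set. It again uses the assumed MASS `Pima.te` split and an assumed 0.5 threshold. `test_accuracy()` is a hypothetical helper, and because the split differs from the author’s, the numbers will not reproduce the 78% and 82% reported here.

```r
library(MASS)

# Assumed data and 80:20 split (MASS::Pima.te, arbitrary seed)
pima <- Pima.te
set.seed(42)
train_idx <- sample(seq_len(nrow(pima)), floor(0.8 * nrow(pima)))
train <- pima[train_idx, ]
test  <- pima[-train_idx, ]

full    <- glm(type ~ ., data = train, family = binomial)
reduced <- update(full, . ~ . - age - skin)  # drop age and skin

# Hypothetical helper: test-set accuracy at an assumed 0.5 threshold
test_accuracy <- function(fit, newdata, threshold = 0.5) {
  prob <- predict(fit, newdata = newdata, type = "response")
  pred <- ifelse(prob > threshold, "Yes", "No")
  mean(pred == as.character(newdata$type))
}

acc <- c(full    = test_accuracy(full, test),
         reduced = test_accuracy(reduced, test))
print(round(acc, 3))
```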

In conclusion, machine learning methods are widely used to predict diabetes, and early detection of diabetes is crucial. Model optimization is an essential part of predicting diabetes accurately: here, it improved the accuracy from 78% to 82%. As data scientists, we must constantly look for ways to refine our models to obtain the most accurate outcomes.