The Unprecedented Growth of Large language models

Large language models (LLMs) have seen unprecedented growth in their development over the past year as OpenAI’s ChatGPT has broken the record for the fastest-growing consumer application in history, as reported by UBS. Similar offerings have been released by tech giants, namely Google, Meta, and Microsoft, as well as from a host of rapidly growing startups such as Anthropic and Cohere. This pace of innovation within not only technology, but the field of artificial intelligence, has led to significant breakthroughs, as both corporations and countries strive to be at the forefront of this new era. However, this innovation has largely outpaced regulation, bringing with it nuanced ethical questions that have no concrete answer. As a result, companies have been hesitant to adopt these technologies in production environments, as can be seen from the results of this survey conducted by Andreessen Horowitz, a California-based venture capital firm.

The Accessibility and Scrutiny of AI

Never before has it been this accessible to leverage the capabilities of AI for both creation and development, with no field left untouched by the potential of Generative AI. Consequently, there has also never been as much of a spotlight on AI and its development. The rapid rise of LLMs has generated intense scrutiny of their shortcomings, namely their tendency to hallucinate and produce biased, toxic, or otherwise unethical responses. To aid in countering these weaknesses, tools to detect LLM vulnerabilities have been developed. One such tool is Garak. Garak is an open-source LLM vulnerability scanner which performs targeted tests to expose flaws and vulnerabilities of an LLM system, also known as red-teaming. This process allows for a comprehensive assessment of its susceptibility to various failures, including prompt injection, toxicity generation, data leakage, and false reasoning. Garak runs a series of probes which directly interact with the language model to single out vulnerabilities, using various detection methods to assess whether a model has passed or failed a probe. Garak runs via a command line interface and can be installed as a Python package. It integrates easily with the most popular LLM providers and is fairly easy to set up and use. You can learn more about Garak and its use cases through their comprehensive documentation.

Implementing Guardrails for Safer AI Systems

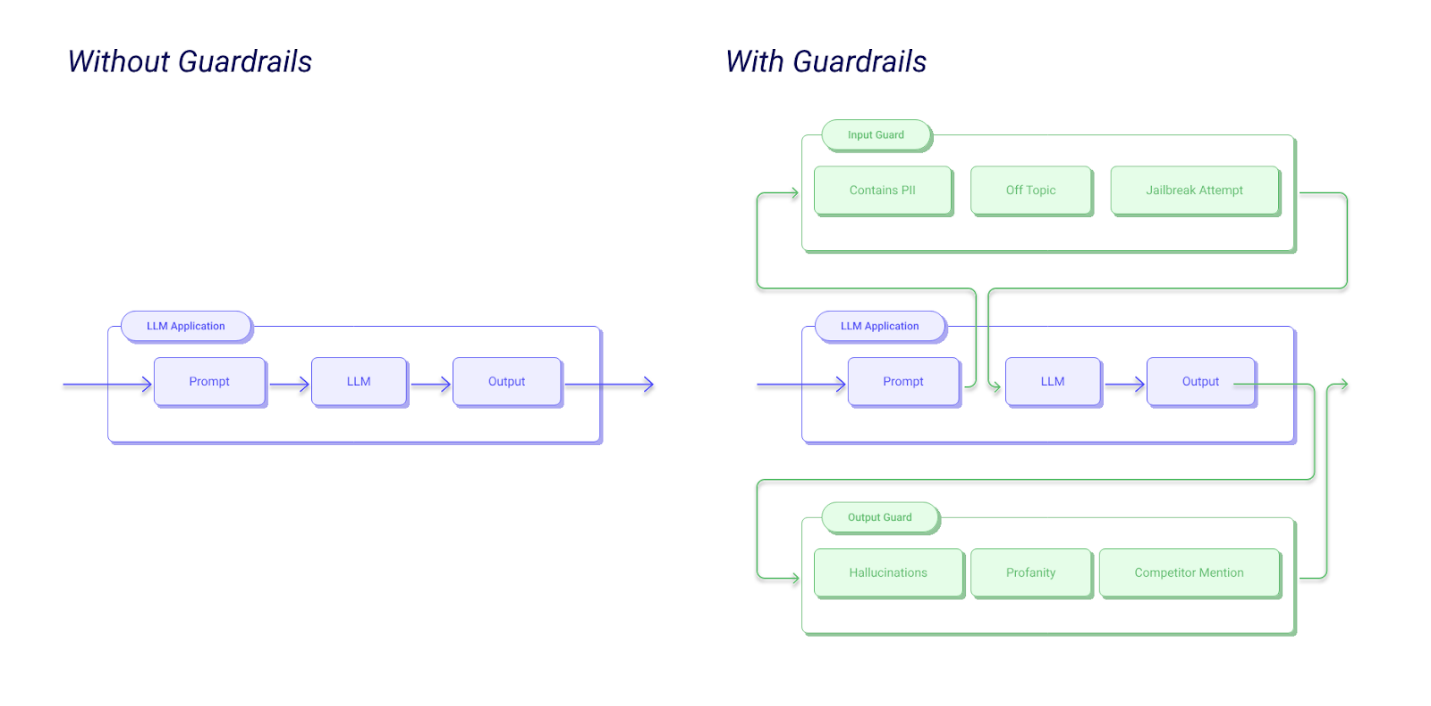

Evaluating and understanding the failures of models is the first step in identifying their weaknesses. The next step is the correction and mitigation of these failures. A major component in achieving this alignment is the implementation of guardrails within AI systems. For LLMs, rules (often referred to as guardrails in this instance) can be programmed and applied to sanitise (i.e. validate) both user input and model output. Guardrails, a popular open-source library by Guardrails AI, created a diagram that captures a typical workflow:

Guardrails AI, used under an Apache-2.0 License

Through the use of Guardrails, it is possible to monitor and facilitate the user’s interaction with the LLM, thus ensuring that its operations align with defined principles. Through the implementation of bespoke guardrails, one can mitigate the various risks posed by AI models, allowing for the creation of reliable and resilient systems. NVIDIA also released their NeMo-Guardrails toolkit which allows for programmatic guardrails to be added to conversational systems, ensuring that they remain safe and restricted to their desired domain. Through the use of either Guardrails or NeMo, it is possible to effectively guide the behaviour of LLMs, ensuring that they are not only aligned with a desired set of principles but that the risks of their failures are mitigated.

Conclusion

Generative AI has quickly become a universal part of our lives. Large language models have ushered in a new era of technology, bridging the gap between humans and computers like we have never seen before. LLMs hold incredible promise in fields as varying as marketing, finance, and healthcare. However, with this widespread power comes the need to create systems that are reliable, safe, and secure. The topics of responsible development and effective alignment of AI systems are becoming increasingly important. Thus, it is essential to understand how to leverage the necessary tools to achieve truly beneficial systems. Our team at ProCogia is actively searching for ways to further enhance the safety and performance of large language models and promote responsible AI development across a variety of sectors. Click here to read more of our blogs focused on AI.